Container Platform#

Prerequisites#

Docker installed

Linux or Windows with WSL2

Python 3.8, 3.9, 3.10, 3.11, or 3.12, 64-bit (https://www.python.org/downloads/release)
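The Docker prerequisite can be checked from Python before continuing. The following is a minimal sketch and not part of idmtools: the helper name docker_available is hypothetical, and it assumes that the docker CLI is on the PATH and that `docker info` exits with a non-zero status when the daemon cannot be reached.

```python
import shutil
import subprocess


def docker_available(timeout: float = 10.0) -> bool:
    """Return True if the docker CLI is found and `docker info` succeeds."""
    if shutil.which("docker") is None:
        return False  # CLI not installed or not on PATH
    try:
        result = subprocess.run(
            ["docker", "info"],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False  # CLI could not be started or did not answer in time
    return result.returncode == 0


print("Docker ready:", docker_available())
```

If this prints False on Windows, it is worth confirming that Docker is running and that WSL2 integration is enabled.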

Create a virtual environment

There are multiple ways to create a virtual environment. Below is an example using venv:

python -m venv container_env

Activate virtual environment

On Windows:

container_env\Scripts\activate

On Linux:

source container_env/bin/activate

Install container platform

pip install idmtools[container] --index-url=https://packages.idmod.org/api/pypi/pypi-production/simple
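After installation, one way to confirm that the packages are visible in the active virtual environment is to look them up without importing them. This is a small sketch: the helper is_installed is hypothetical, and the module names are taken from the import statements used in the examples later on this page.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(module_name) is not None


for name in ("idmtools", "idmtools_platform_container"):
    print(f"{name}: {'found' if is_installed(name) else 'missing'}")
```

A "missing" result usually means that the virtual environment is not activated or that the pip command ran in a different environment.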

Enable Developer Mode on Windows

Open Settings

Click on Update & Security

Click on For developers

Select Developer mode

Enable long file path support on Windows if needed

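Windows file paths have a 255-character length limitation. To enable long path support, configure the “Enable Long Path Support” setting in the Windows Group Policy Editor. For more details, refer to the Autodesk Support article: https://www.autodesk.com/support/technical/article/caas/sfdcarticles/sfdcarticles/The-Windows-10-default-path-length-limitation-MAX-PATH-is-256-characters.html.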

ContainerPlatform#

The ContainerPlatform allows the use of Docker containers and the ability to run jobs locally. This platform leverages Docker’s containerization capabilities to provide a consistent and isolated environment for running computational tasks. The ContainerPlatform is responsible for managing the creation, execution, and cleanup of Docker containers used to run simulations. It offers a high-level interface for interacting with Docker containers, allowing users to submit jobs, monitor their progress, and retrieve results.

For more details on the architecture and the packages included in idmtools and ContainerPlatform, please refer to the documentation (Architecture and packages reference).

Key features#

Docker Integration: Ensures that Docker is installed and the Docker daemon is running before executing any tasks.

Experiment and Simulation Management: Provides methods to run and manage experiments and simulations within Docker containers.

Volume Binding: Supports binding host directories to container directories, allowing for data sharing between the host and the container.

Container Validation: Validates the status and configuration of Docker containers to ensure they meet the platform’s requirements.

Script Conversion: Converts scripts to Linux format if the host platform is Windows, ensuring compatibility within the container environment.

Job History Management: Keeps track of job submissions and their corresponding container IDs for easy reference and management.

Minimal libraries and packages in Docker image: Only a Linux OS, python3, and mpich need to be installed in the Docker image. ContainerPlatform binds the host directory to the container directory to run the simulation.

Flexible simulation directory: Users can customize the folder structure of the simulation output by including or excluding the suite, experiment, or simulation names in the simulation path.
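To illustrate the Script Conversion feature: scripts written on Windows typically use CRLF line endings, which can break shell scripts inside a Linux container. The sketch below assumes that "Linux format" refers to line endings. It is not ContainerPlatform's own code, and the helper name to_unix_line_endings is hypothetical.

```python
def to_unix_line_endings(data: bytes) -> bytes:
    """Convert Windows (CRLF) line endings to Unix (LF)."""
    return data.replace(b"\r\n", b"\n")


windows_script = b"#!/bin/bash\r\necho 'Hello, World!'\r\n"
unix_script = to_unix_line_endings(windows_script)

print(b"\r" in windows_script)  # True
print(b"\r" in unix_script)     # False
```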

ContainerPlatform attributes#

job_directory: The directory where job data is stored.

docker_image: The Docker image to run the container.

extra_packages: Additional packages to install in the container.

data_mount: The data mount point in the container.

user_mounts: User-defined mounts for additional volume bindings.

container_prefix: Prefix for container names.

force_start: Flag to force start a new container.

new_container: Flag to start a new container.

include_stopped: Flag to include stopped containers in operations.

debug: Flag to enable debug mode.

container_id: The ID of the container being used.

max_job: The maximum number of jobs to run in parallel.

retries: The number of retries to attempt for a job.

ntasks: Number of MPI processes. If greater than 1, it triggers mpirun.
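As a sketch of how these attributes might be supplied, the helper below collects a few of them as keyword arguments and passes them to the Platform factory. It assumes that the factory accepts the attribute names listed above as keyword arguments, in the same way as job_directory in the example later on this page. The helper names and the numeric values are illustrative, not documented defaults.

```python
from typing import Any, Dict


def container_platform_kwargs(job_directory: str, ntasks: int = 1,
                              max_job: int = 4, retries: int = 1) -> Dict[str, Any]:
    """Collect keyword arguments named after the attributes listed above."""
    if ntasks < 1:
        raise ValueError("ntasks must be at least 1")
    return {
        "job_directory": job_directory,
        "ntasks": ntasks,      # values greater than 1 trigger mpirun
        "max_job": max_job,    # illustrative value, not a documented default
        "retries": retries,    # illustrative value, not a documented default
    }


def create_platform(**kwargs: Any):
    """Create the platform; needs idmtools[container] and a running Docker daemon."""
    # Imported lazily so the helper above can be used without idmtools installed.
    from idmtools.core.platform_factory import Platform
    return Platform("Container", **kwargs)


kwargs = container_platform_kwargs("destination_directory", ntasks=2)
print(kwargs)
# platform = create_platform(**kwargs)  # uncomment once Docker is running
```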

Usage#

The ContainerPlatform class is typically used to run computational experiments and simulations within Docker containers, ensuring a consistent and isolated environment. It provides various methods to manage and validate containers, submit jobs, and handle data volumes.

Example#

This example demonstrates how to use the ContainerPlatform class to run a simple command task within a Docker container.

Create a Python file named example_demo.py on your host machine and add the following code:

from idmtools.entities.command_task import CommandTask

from idmtools.entities.experiment import Experiment

from idmtools_platform_container.container_platform import ContainerPlatform

# Initialize the platform

from idmtools.core.platform_factory import Platform

platform = Platform('Container', job_directory="destination_directory")

# Or

# platform = ContainerPlatform(job_directory="destination_directory")

# Define task

command = "echo 'Hello, World!'"

task = CommandTask(command=command)

# Run an experiment

experiment = Experiment.from_task(task, name="example")

experiment.run(platform=platform)

To run example_demo.py in the virtual environment on the host machine:

python example_demo.py

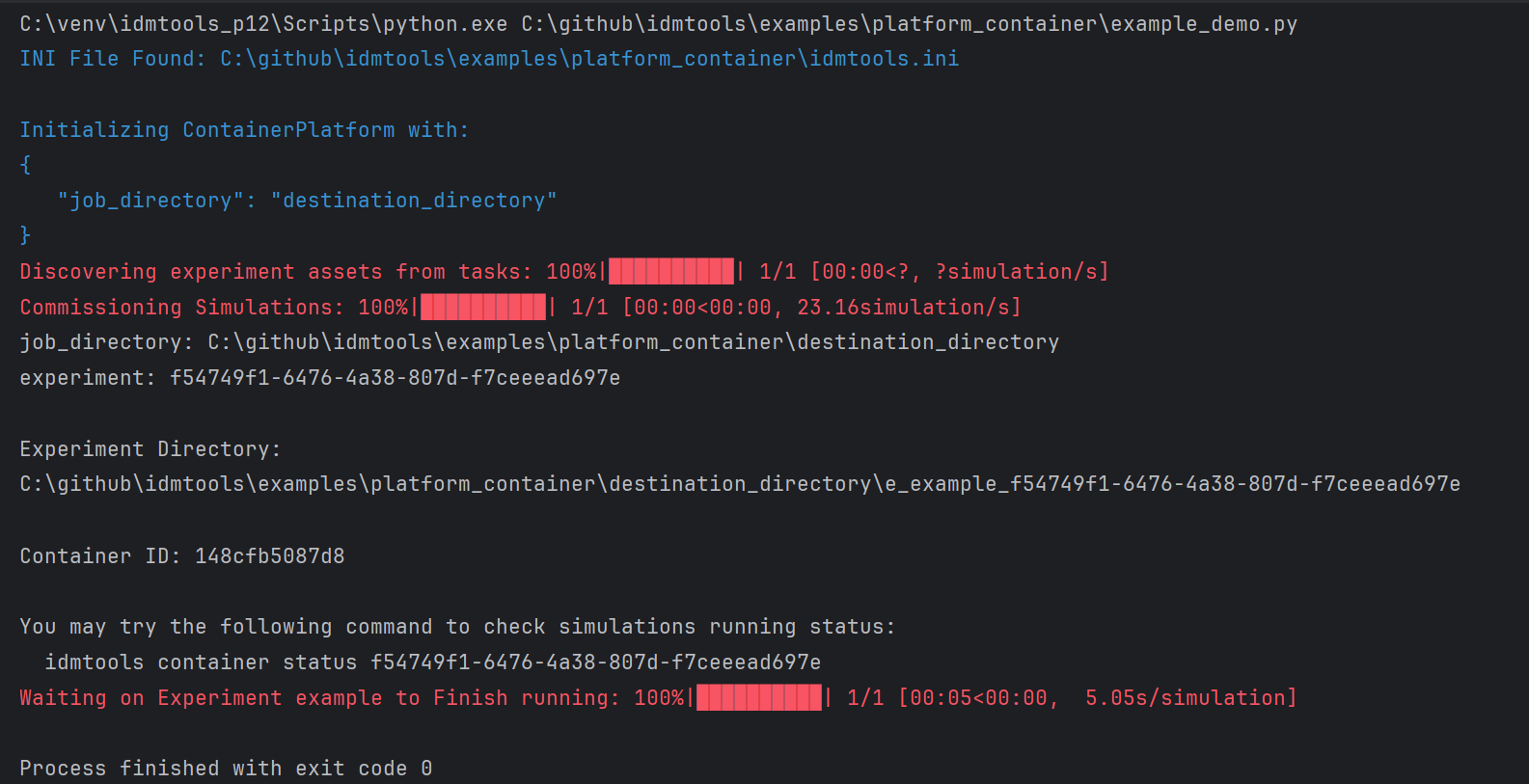

Running this example produces the following output:

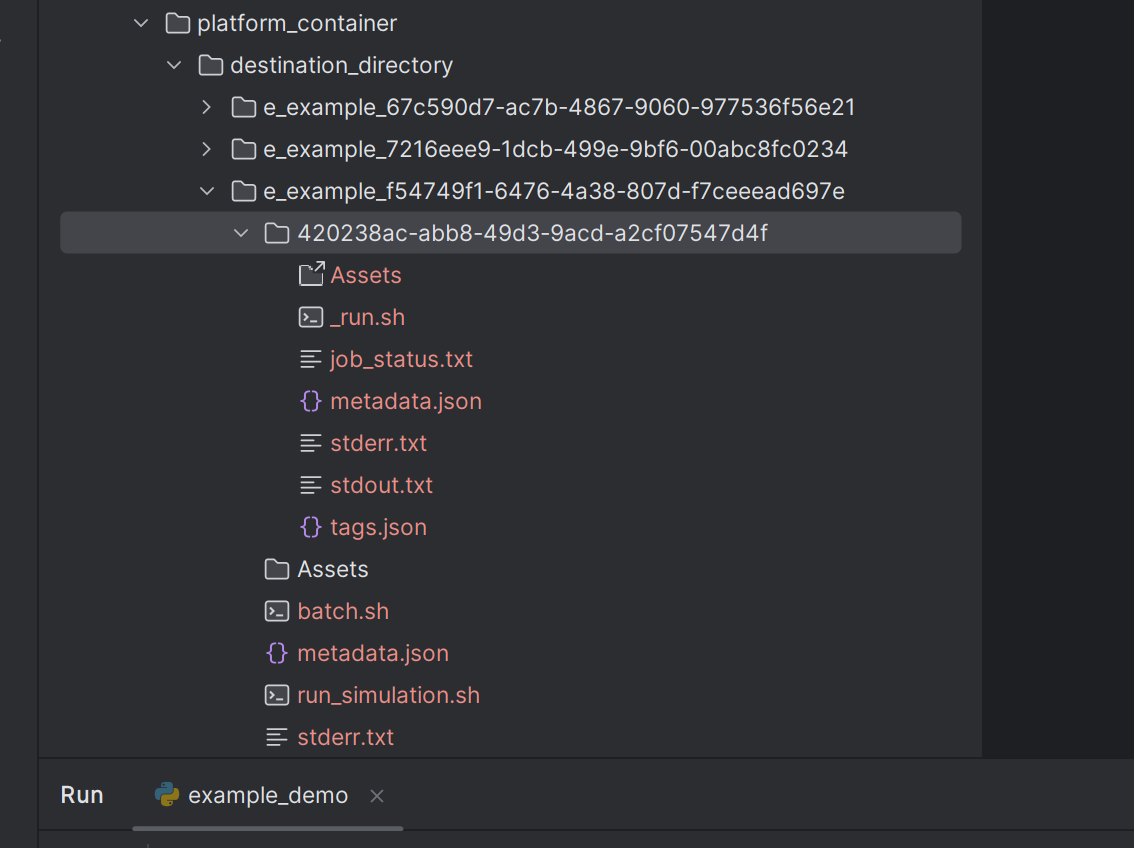

The output will be saved in the destination_directory folder on the host machine:

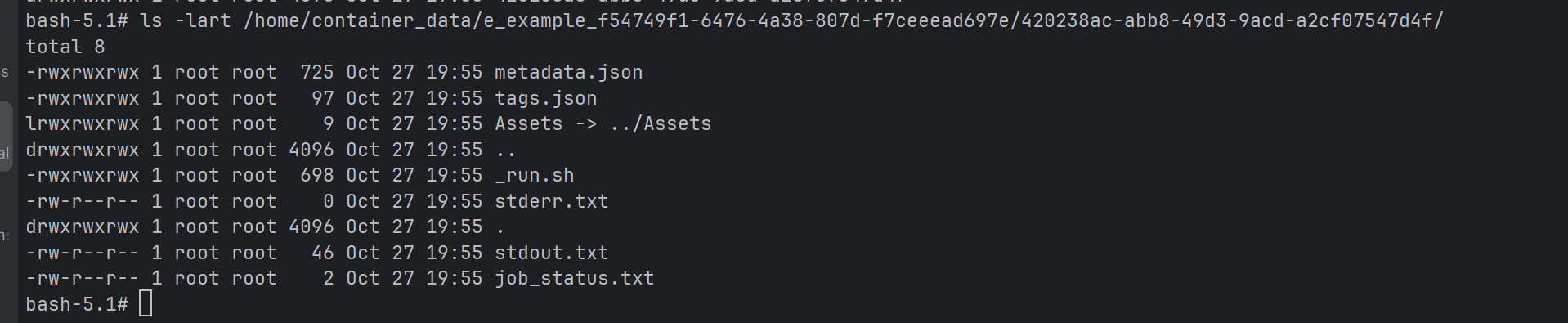
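To inspect the generated files without a file browser, the job directory can be walked from Python. This is a minimal sketch using only the standard library. The helper name list_job_files is hypothetical, and destination_directory is the job_directory value from the example above.

```python
from pathlib import Path
from typing import List


def list_job_files(job_directory: str) -> List[str]:
    """Return all files below job_directory as sorted, POSIX-style relative paths."""
    root = Path(job_directory)
    if not root.is_dir():
        return []  # nothing has been written yet
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file())


for relative_path in list_job_files("destination_directory"):
    print(relative_path)
```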

Users can also view the same results inside the Docker container.

More examples#

Run the following included Python example to submit and run a job on your Container platform:

# This example demonstrates how to run a simulation using a container platform alias 'CONTAINER'.

import os

import sys

from functools import partial

from typing import Any, Dict

from idmtools.builders import SimulationBuilder

from idmtools.core.platform_factory import Platform

from idmtools.entities.experiment import Experiment

from idmtools.entities.simulation import Simulation

from idmtools.entities.templated_simulation import TemplatedSimulations

from idmtools_models.python.json_python_task import JSONConfiguredPythonTask

# job dir is where the experiment will be run.

# Define Container Platform. For full list of parameters see container_platform.py in idmtools_platform_container

platform = Platform("Container", job_directory="DEST")

# Define path to assets directory

assets_directory = os.path.join("..", "python_model", "inputs", "python", "Assets")

# Define task

task = JSONConfiguredPythonTask(script_path=os.path.join(assets_directory, "model.py"), parameters=(dict(c=0)))

task.python_path = "python3"

# Define templated simulation

ts = TemplatedSimulations(base_task=task)

ts.base_simulation.tags['tag1'] = 1

# Define builder

builder = SimulationBuilder()

# Define partial function to update parameter

def param_update(simulation: Simulation, param: str, value: Any) -> Dict[str, Any]:
    return simulation.task.set_parameter(param, value)

# Let's sweep the parameter 'a' for the values 0-2

builder.add_sweep_definition(partial(param_update, param="a"), range(3))

# Let's sweep the parameter 'b' for the values 0-4

builder.add_sweep_definition(partial(param_update, param="b"), range(5))

ts.add_builder(builder)

# Create Experiment using template builder

experiment = Experiment.from_template(ts, name="python example")

# Add our own custom tag to experiment

experiment.tags["tag1"] = 1

# Add all files from assets_directory to experiment folder

experiment.assets.add_directory(assets_directory=assets_directory)

experiment.run(platform=platform, wait_until_done=True)

# Run the following command to check the status

print("idmtools file DEST status --exp-id " + experiment.id)

sys.exit(0 if experiment.succeeded else -1)
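The two sweep definitions in this example are combined rather than run one after the other. Assuming the builder forms the Cartesian product of its sweep definitions, the experiment contains 3 × 5 = 15 simulations. The standard-library sketch below only reproduces the parameter combinations. It does not use idmtools.

```python
from itertools import product

a_values = range(3)  # sweep values for parameter 'a'
b_values = range(5)  # sweep values for parameter 'b'

# One dictionary per simulation: every value of 'a' paired with every value of 'b'.
combinations = [{"a": a, "b": b} for a, b in product(a_values, b_values)]

print(len(combinations))  # 15
print(combinations[0])    # {'a': 0, 'b': 0}
print(combinations[-1])   # {'a': 2, 'b': 4}
```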

Folder structure#

By default, idmtools will generate simulations with the following structure for container, slurm and general platforms:

job_directory/s_suite_name_uuid/e_experiment_name_uuid/simulation_uuid

job_directory is the base directory for suite, experiment and simulations.

s_suite_name_uuid is the suite directory, where the suite name (truncated to a maximum of 30 characters) is prefixed with s_, followed by its unique suite UUID.

e_experiment_name_uuid is the experiment directory, where the experiment name (also truncated to 30 characters) is prefixed with e_, followed by its unique experiment UUID.

simulation_uuid is the simulation directory, named with the simulation UUID only.

Suite is optional. If the user does not specify a suite, the folder will be:

job_directory/e_<experiment_name>_<experiment_uuid>/simulation_uuid

Example:

If you create a suite named: my_very_long_suite_name_for_malaria_experiment

and an experiment named: test_experiment_with_calibration_phase,

idmtools will automatically truncate both names to a maximum of 30 characters and apply the prefixes s_ for suites and e_ for experiments, resulting in a folder structure like:

job_directory/
└── s_my_very_long_suite_name_for_ma_12345678-9abc-def0-1234-56789abcdef0/
    └── e_test_experiment_with_calibrati_abcd1234-5678-90ef-abcd-1234567890ef/
        └── 7c9e6679-7425-40de-944b-e07fc1f90ae7/

Or, if no suite is specified:

job_directory/
└── e_test_experiment_with_calibrati_abcd1234-5678-90ef-abcd-1234567890ef/
    └── 7c9e6679-7425-40de-944b-e07fc1f90ae7/
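The naming rule can be expressed as a short function. This is a sketch of the rule as described on this page (prefix, name truncated to 30 characters, then the UUID), not the idmtools implementation. The function name simulation_directory is hypothetical.

```python
from pathlib import PurePosixPath
from typing import Optional

MAX_NAME_LENGTH = 30  # truncation length stated above


def simulation_directory(job_directory: str, experiment_name: str, experiment_id: str,
                         simulation_id: str, suite_name: Optional[str] = None,
                         suite_id: Optional[str] = None) -> PurePosixPath:
    """Build the default simulation path, with or without a suite level."""
    path = PurePosixPath(job_directory)
    if suite_name is not None and suite_id is not None:
        path = path / f"s_{suite_name[:MAX_NAME_LENGTH]}_{suite_id}"
    path = path / f"e_{experiment_name[:MAX_NAME_LENGTH]}_{experiment_id}"
    return path / simulation_id


# The no-suite case from the example above.
print(simulation_directory(
    "job_directory",
    "test_experiment_with_calibration_phase",
    "abcd1234-5678-90ef-abcd-1234567890ef",
    "7c9e6679-7425-40de-944b-e07fc1f90ae7",
))
```

Passing suite_name and suite_id adds the s_ level in front of the experiment directory.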

The user can customize the folder structure by setting the following parameters in the idmtools.ini file:

name_directory = False: The suite and experiment names are excluded from the simulation path.

sim_name_directory = True: The simulation name is included in the simulation path.

Additionally, you can view the same results inside the Docker container at /home/container-data/<suite_path>/<experiment_path>/<simulation_path>. The container-data directory is the default data mount point in the container.
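The correspondence between a host path and its location inside the container can be sketched as follows. The sketch assumes that job_directory is bound to the default data mount point /home/container-data and that the layout below it is identical on both sides. The helper name container_path and the example paths are hypothetical.

```python
from pathlib import PurePath, PurePosixPath


def container_path(job_directory: str, host_path: str,
                   data_mount: str = "/home/container-data") -> PurePosixPath:
    """Map a path below job_directory on the host to its path in the container."""
    relative = PurePath(host_path).relative_to(PurePath(job_directory))
    # The container runs Linux, so the result always uses POSIX separators.
    return PurePosixPath(data_mount).joinpath(*relative.parts)


print(container_path("/tmp/DEST", "/tmp/DEST/e_example_1234/sim-1"))
```

relative_to raises ValueError if host_path is not located below job_directory, which guards against mapping unrelated paths.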

Note: If you run the script on Windows, be aware of the file path length limitation (fewer than 255 characters). If you need long file paths, enable the “Enable Long Path Support” setting in the Windows Group Policy Editor. For details, refer to https://www.autodesk.com/support/technical/article/caas/sfdcarticles/sfdcarticles/The-Windows-10-default-path-length-limitation-MAX-PATH-is-256-characters.html.
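To judge how close a given job_directory comes to this limit, the worst-case length of the default simulation path can be estimated. The sketch below assumes the default layout with a suite, names at or above the 30-character truncation length, and 36-character UUID strings. The job directory shown is a made-up example.

```python
UUID_LENGTH = 36       # length of a canonical UUID string
MAX_NAME_LENGTH = 30   # name truncation used in the default folder structure
PATH_LIMIT = 255       # limit quoted in the note above


def longest_simulation_path(job_directory: str) -> int:
    """Worst-case length of job_directory/s_.../e_.../simulation_uuid."""
    suite = len("s_") + MAX_NAME_LENGTH + len("_") + UUID_LENGTH
    experiment = len("e_") + MAX_NAME_LENGTH + len("_") + UUID_LENGTH
    separators = 3  # one separator before each of the three levels
    return len(job_directory) + suite + experiment + UUID_LENGTH + separators


job_directory = r"C:\Users\me\DEST"  # hypothetical location
length = longest_simulation_path(job_directory)
print("worst-case path length:", length)
print("characters left for file names:", PATH_LIMIT - length)
```

Files inside the simulation directory add their own names on top of this, so a short job_directory leaves more room.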

Note

WorkItem is not supported on the Container Platform. It is not needed in most cases, since the code already runs on the user’s local computer.

Creating an AssetCollection or referencing an existing one is not supported on the Container Platform in the current release. If you have used the COMPS Platform, you may have scripts that use these objects. To run such scripts on the Container Platform, update them so that they no longer use these objects.

For example, you may need to remove the following code, which is used with the COMPS Platform:

asset_collection = AssetCollection.from_asset_collection_id('50002755-20f1-ee11-aa12-b88303911bc1')

Running with Singularity is not needed on the Container Platform. If you take an existing COMPS example and try to run it on the Container Platform, you may need to remove the code that sets up the Singularity image.

For example, you may need to remove the following code, which is used with the COMPS Platform:

emod_task.set_sif(sif_path)
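For the AssetCollection case, one possible replacement is to attach the files from a local directory, as the longer example on this page does with experiment.assets.add_directory. The sketch below assumes that the files referenced by the asset collection are also available locally. The helper name attach_local_assets is hypothetical.

```python
def attach_local_assets(experiment, assets_directory: str) -> None:
    """Add every file under assets_directory to the experiment's assets."""
    # Stands in for a lookup such as AssetCollection.from_asset_collection_id(...),
    # which is not supported on the Container Platform.
    experiment.assets.add_directory(assets_directory=assets_directory)
```

Call it before experiment.run(platform=platform) so that the files are copied into the experiment folder.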